Forecaster with Deep Learning

Recurrent Neural Networks (RNN) and Long Short-Term Memory (LSTM)

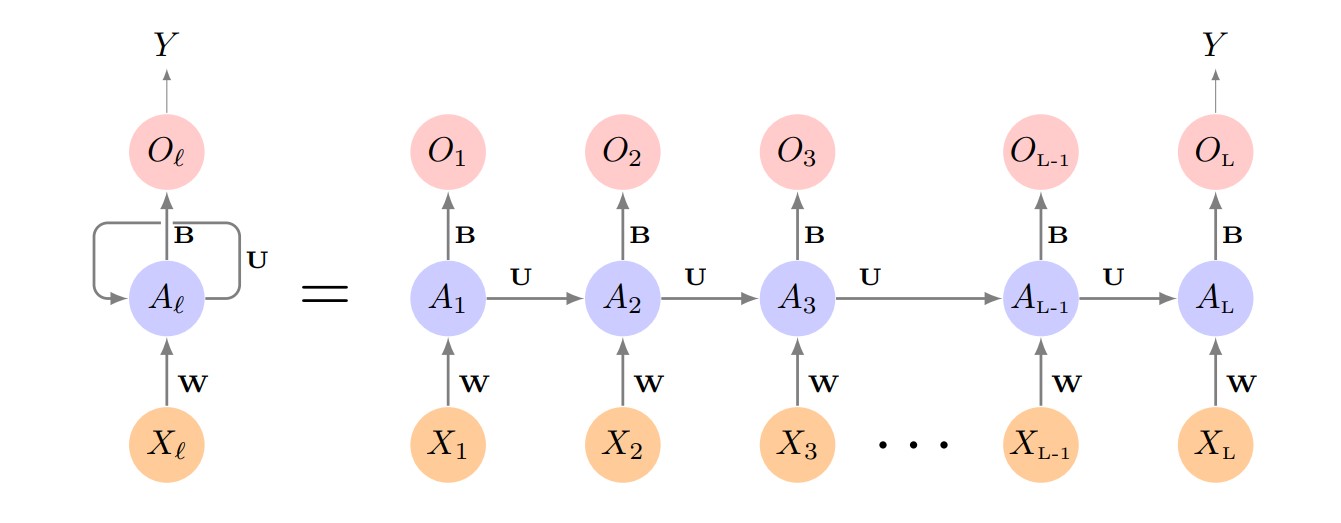

Recurrent Neural Networks (RNNs) are a type of neural network designed to process data that follows a sequential order. In conventional neural networks, such as feedforward networks, information flows in one direction, from input to output through the hidden layers, without taking the sequential structure of the data into account. In contrast, RNNs maintain an internal state (a memory) that lets them retain information about past elements of the sequence and use it to predict future values.

The basic unit of an RNN is the recurrent cell. This cell takes two inputs: the current input and the previous hidden state. The hidden state can be understood as a "memory" that retains information from previous time steps. The current input and the previous hidden state are combined to compute the current output and the new hidden state. The new hidden state is then passed to the next time step, together with the next input in the sequence.
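The recurrence described above can be sketched in a few lines of NumPy. This is a minimal illustration assuming a vanilla (Elman) cell with a tanh activation and random weights; the names rnn_forward, W_x, W_h and b are hypothetical, and this is not how Keras implements its recurrent layers. It also shows that the last hidden state depends on the very first input of the sequence.

```python
import numpy as np

def rnn_forward(x_seq, W_x, W_h, b):
    """Run a vanilla RNN cell over a sequence.

    x_seq : array of shape (T, n_features), one row per time step.
    Returns the hidden state after every step, shape (T, n_units).
    """
    h = np.zeros(W_h.shape[0])  # initial hidden state: empty memory
    states = []
    for x_t in x_seq:  # process the sequence in order
        # Combine the current input with the previous hidden state
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
T, n_features, n_units = 6, 1, 4
x_seq = rng.normal(size=(T, n_features))
W_x = rng.normal(scale=0.5, size=(n_units, n_features))
W_h = rng.normal(scale=0.5, size=(n_units, n_units))
b = np.zeros(n_units)

H = rnn_forward(x_seq, W_x, W_h, b)
print(H.shape)  # (6, 4)

# Perturbing only the first input changes the last hidden state:
# information from step 1 is carried forward through h.
x_alt = x_seq.copy()
x_alt[0] += 1.0
H_alt = rnn_forward(x_alt, W_x, W_h, b)
print(np.abs(H_alt[-1] - H[-1]).max() > 0)
```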

Despite the progress achieved with RNN architectures, they struggle to capture long-term patterns, largely because gradients tend to vanish (or explode) when they are propagated back through many time steps. This is why variants such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) were developed: they address these problems and retain long-term information more effectively.

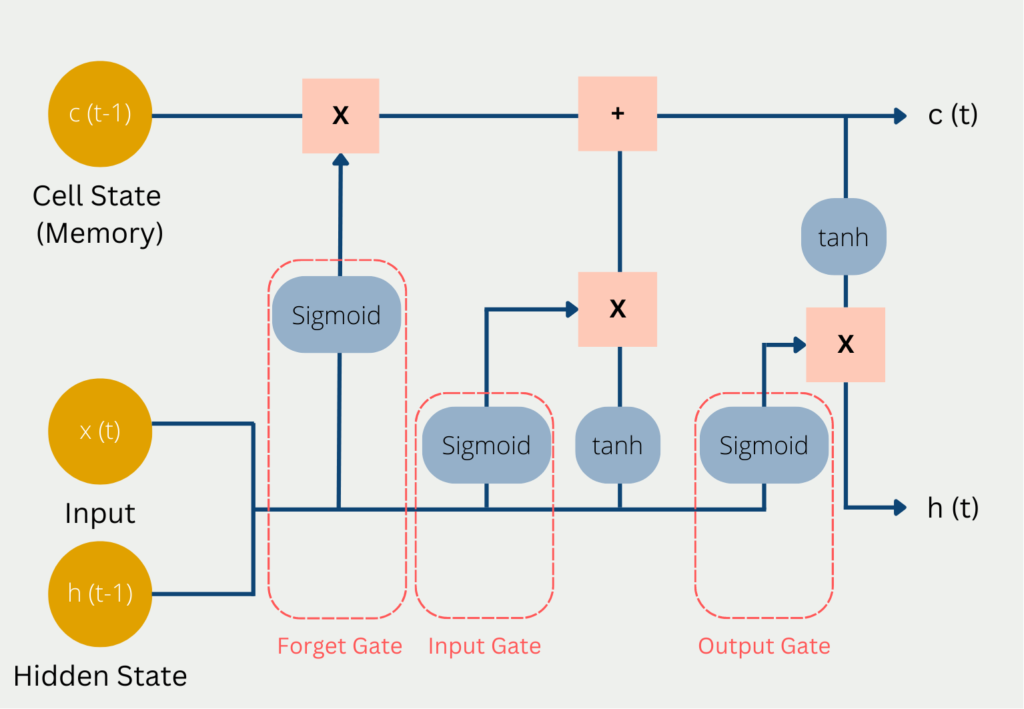

Long Short-Term Memory (LSTM) neural networks are a specialized type of RNN designed to overcome the difficulty of capturing long-term temporal dependencies. Unlike traditional RNNs, LSTMs have a more complex architecture that adds a memory cell and gating mechanisms to control how information is managed over time.

Structure of LSTMs

LSTMs have a modular structure consisting of three fundamental gates: the forget gate, the input gate, and the output gate. These gates work together to regulate the flow of information through the memory unit, allowing for more precise control over what information to retain and what to forget.

Forget Gate: Regulates how much of the information stored in the cell state should be forgotten and how much should be retained, by combining the current input and the previous hidden state through a sigmoid function.

Input Gate: Decides how much new information should be added to the cell state (the long-term memory).

Output Gate: Determines how much of the cell state is exposed as the output (the new hidden state), combining the current input and the previous hidden state through a sigmoid function.
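The three gates can be written out explicitly. The NumPy sketch below implements one step of the standard LSTM formulation (sigmoid gates, tanh for the candidate values and for squashing the cell state); the function name lstm_step, the weight names and the gate ordering inside the stacked matrices are choices made for this illustration. Keras lets you change the cell activation: the models built later in this document report 'activation': 'relu' for their LSTM layers, whereas this sketch uses the textbook tanh.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One time step of a standard LSTM cell.

    W: (4*n_units, n_features) input kernel
    U: (4*n_units, n_units)    recurrent kernel
    b: (4*n_units,)            bias
    Gate blocks are stacked in the order [input, forget, candidate, output].
    """
    z = W @ x_t + U @ h_prev + b
    z_i, z_f, z_g, z_o = np.split(z, 4)
    i = sigmoid(z_i)          # input gate: how much new information to write
    f = sigmoid(z_f)          # forget gate: how much of the old cell state to keep
    g = np.tanh(z_g)          # candidate values for the cell state
    o = sigmoid(z_o)          # output gate: how much of the cell state to expose
    c_t = f * c_prev + i * g  # updated cell state (long-term memory)
    h_t = o * np.tanh(c_t)    # new hidden state (the cell's output)
    return h_t, c_t

n_features, n_units = 1, 4
rng = np.random.default_rng(0)
W = rng.normal(scale=0.5, size=(4 * n_units, n_features))
U = rng.normal(scale=0.5, size=(4 * n_units, n_units))
b = np.zeros(4 * n_units)

h, c = np.zeros(n_units), np.zeros(n_units)
for x_t in rng.normal(size=(32, n_features)):  # 32 time steps, like lags=32
    h, c = lstm_step(x_t, h, c, W, U, b)

print(W.size + U.size + b.size)  # 96
```

Setting a large forget-gate bias and a very negative input-gate bias makes the cell copy its state forward almost unchanged, which is the mechanism that lets LSTMs carry information across many time steps. With 4 units and one input feature the cell holds 16 + 64 + 16 = 96 values, the same parameter count Keras reports for the 4-unit LSTM layer later in the document.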

💡 Tip

To learn more about forecasting with deep learning models visit our examples:

Libraries and data

💡 Tip: Configuring your backend

As of Skforecast version 0.13.0, PyTorch backend support is available. You can configure the backend by exporting the KERAS_BACKEND environment variable or by editing your local configuration file at ~/.keras/keras.json. The available backend options are: "tensorflow" and "torch". Example:

import os
os.environ["KERAS_BACKEND"] = "torch"
import keras

⚠ Warning

The backend must be configured before importing Keras, and it cannot be changed once the package has been imported.

# Libraries
# ==============================================================================
import os
os.environ["KERAS_BACKEND"] = "torch"  # 'tensorflow' or 'torch'
import keras
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.preprocessing import MinMaxScaler
import skforecast
from skforecast.plot import set_dark_theme
from skforecast.datasets import fetch_dataset
from skforecast.deep_learning import ForecasterRnn
from skforecast.deep_learning.utils import create_and_compile_model
from skforecast.model_selection import TimeSeriesFold
from skforecast.model_selection import backtesting_forecaster_multiseries
from keras.optimizers import Adam
from keras.losses import MeanSquaredError
from keras.callbacks import EarlyStopping
import warnings
warnings.filterwarnings('once')

print(f"skforecast version: {skforecast.__version__}")
print(f"keras version: {keras.__version__}")

# Compare the major version as an integer (string comparison is unreliable)
if int(keras.__version__.split(".")[0]) >= 3:
    print(f"Using backend: {keras.backend.backend()}")
    if keras.backend.backend() == "tensorflow":
        import tensorflow
        print(f"tensorflow version: {tensorflow.__version__}")
    elif keras.backend.backend() == "torch":
        import torch
        print(f"torch version: {torch.__version__}")
    else:
        print("Backend not recognized. Please use 'tensorflow' or 'torch'.")

skforecast version: 0.14.0
keras version: 3.6.0
Using backend: torch
torch version: 2.2.2+cpu

# Data download
# ==============================================================================
air_quality = fetch_dataset(name="air_quality_valencia_no_missing")
air_quality.head()

air_quality_valencia_no_missing
-------------------------------
Hourly measures of several air chemical pollutant at Valencia city (Avd. Francia) from 2019-01-01 to 2023-12-31. Including the following variables: pm2.5 (µg/m³), CO (mg/m³), NO (µg/m³), NO2 (µg/m³), PM10 (µg/m³), NOx (µg/m³), O3 (µg/m³), Veloc. (m/s), Direc. (degrees), SO2 (µg/m³). Missing values have been imputed using linear interpolation.
Red de Vigilancia y Control de la Contaminación Atmosférica, 46250047-València - Av. França, https://mediambient.gva.es/es/web/calidad-ambiental/datos-historicos.
Shape of the dataset: (43824, 10)

| datetime | so2 | co | no | no2 | pm10 | nox | o3 | veloc. | direc. | pm2.5 |
|---|---|---|---|---|---|---|---|---|---|---|
| 2019-01-01 00:00:00 | 8.0 | 0.2 | 3.0 | 36.0 | 22.0 | 40.0 | 16.0 | 0.5 | 262.0 | 19.0 |
| 2019-01-01 01:00:00 | 8.0 | 0.1 | 2.0 | 40.0 | 32.0 | 44.0 | 6.0 | 0.6 | 248.0 | 26.0 |
| 2019-01-01 02:00:00 | 8.0 | 0.1 | 11.0 | 42.0 | 36.0 | 58.0 | 3.0 | 0.3 | 224.0 | 31.0 |
| 2019-01-01 03:00:00 | 10.0 | 0.1 | 15.0 | 41.0 | 35.0 | 63.0 | 3.0 | 0.2 | 220.0 | 30.0 |
| 2019-01-01 04:00:00 | 11.0 | 0.1 | 16.0 | 39.0 | 36.0 | 63.0 | 3.0 | 0.4 | 221.0 | 30.0 |

# Checking the frequency of the time series
# ==============================================================================
print(f"Index: {air_quality.index.dtype}")
print(f"Frequency: {air_quality.index.freq}")

Index: datetime64[ns]
Frequency: <Hour>

# Split train-validation-test
# ==============================================================================
air_quality = air_quality.loc["2019-01-01 00:00:00":"2021-12-31 23:59:59", :].copy()
end_train = "2021-03-31 23:59:00"
end_validation = "2021-09-30 23:59:00"
air_quality_train = air_quality.loc[:end_train, :].copy()
air_quality_val = air_quality.loc[end_train:end_validation, :].copy()
air_quality_test = air_quality.loc[end_validation:, :].copy()

print(
    f"Dates train : {air_quality_train.index.min()} --- "
    f"{air_quality_train.index.max()} (n={len(air_quality_train)})"
)
print(
    f"Dates validation : {air_quality_val.index.min()} --- "
    f"{air_quality_val.index.max()} (n={len(air_quality_val)})"
)
print(
    f"Dates test : {air_quality_test.index.min()} --- "
    f"{air_quality_test.index.max()} (n={len(air_quality_test)})"
)

Dates train : 2019-01-01 00:00:00 --- 2021-03-31 23:00:00 (n=19704)
Dates validation : 2021-04-01 00:00:00 --- 2021-09-30 23:00:00 (n=4392)
Dates test : 2021-10-01 00:00:00 --- 2021-12-31 23:00:00 (n=2208)
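Why end_train is set to 23:59 rather than 23:00: label-based slicing with .loc includes both endpoints. When the cut point falls between two hourly timestamps, the training and validation slices cannot share a row; if it matched an existing timestamp exactly, that row would end up in both sets. A small pandas check on synthetic hourly data (not the air-quality dataset) illustrates this:

```python
import pandas as pd

# Two days of synthetic hourly data
idx = pd.date_range("2021-03-31 00:00", periods=48, freq="60min")
df = pd.DataFrame({"value": range(48)}, index=idx)

# Cut point between timestamps, as in the notebook
end_train = "2021-03-31 23:59:00"
train = df.loc[:end_train]
val = df.loc[end_train:]
overlap = train.index.intersection(val.index)
print(len(train), len(val), len(overlap))  # 24 24 0

# Cut point equal to an existing timestamp: that row lands in both slices
end_exact = "2021-03-31 23:00:00"
overlap_exact = df.loc[:end_exact].index.intersection(df.loc[end_exact:].index)
print(len(overlap_exact))  # 1
```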

# Plotting one feature
# ==============================================================================
set_dark_theme()
fig, ax = plt.subplots(figsize=(8, 3))
air_quality_train["pm2.5"].rolling(100).mean().plot(ax=ax, label="train")
air_quality_val["pm2.5"].rolling(100).mean().plot(ax=ax, label="validation")
air_quality_test["pm2.5"].rolling(100).mean().plot(ax=ax, label="test")
ax.set_title("pm2.5")
ax.legend();

Types of problems in time series modeling

1:1 Single-Step Forecasting - Predict one step ahead of a single series using the same series as predictor.

This type of problem involves modeling a time series using only its own past. It is a typical autoregressive problem.

Although Keras makes it easy to build deep learning architectures, it is not always trivial to determine the dimensions of X_train and y_train required to train an LSTM model. These dimensions depend on how many time series are modeled, how many of them are used as predictors, and the length of the prediction horizon: the input has shape (n_samples, lags, n_predictor_series) and the output has shape (n_samples, steps, n_target_series).
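The shapes can be made concrete with a sliding-window sketch. The helper make_windows below is hypothetical (skforecast builds these arrays internally and its implementation may differ); it only illustrates the layout: X holds the past lags of every predictor series and y holds the next steps of the target series only, which is what the (None, 32, 1) input and (None, 1, 1) output in the model summary of this section correspond to.

```python
import numpy as np

def make_windows(values, lags, steps, target_cols):
    """Turn a (time, series) array into RNN training tensors.

    X : (n_windows, lags, n_series)    past values of every predictor series
    y : (n_windows, steps, n_targets)  future values of the target series only
    """
    values = np.asarray(values, dtype=float)
    n_windows = values.shape[0] - lags - steps + 1
    X = np.stack([values[i:i + lags] for i in range(n_windows)])
    y = np.stack([values[i + lags:i + lags + steps][:, target_cols]
                  for i in range(n_windows)])
    return X, y

# 1:1 case: one series, 32 lags, 1 step ahead
series_1 = np.arange(100, dtype=float).reshape(-1, 1)
X, y = make_windows(series_1, lags=32, steps=1, target_cols=[0])
print(X.shape, y.shape)  # (68, 32, 1) (68, 1, 1)

# N:1 case: 10 predictor series, column 6 is the target, 5 steps ahead
series_10 = np.random.default_rng(0).normal(size=(100, 10))
X_multi, y_multi = make_windows(series_10, lags=32, steps=5, target_cols=[6])
print(X_multi.shape, y_multi.shape)  # (64, 32, 10) (64, 5, 1)
```

The first window uses observations 0 to 31 as input and observation 32 as the target, so no future value leaks into X.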

✎ Note

The create_and_compile_model function is designed to simplify the creation of the Keras model. Advanced users can build their own architectures and pass them to the skforecast ForecasterRnn. Input and output dimensions must match the use case to which the model will be applied. See the Annex at the end of the document for more details.

# Create model
# ==============================================================================
series = ["o3"]  # Series used as predictors
levels = ["o3"]  # Target series to predict
lags = 32        # Past time steps to be used to predict the target
steps = 1        # Future time steps to be predicted

data = air_quality[series].copy()
data_train = air_quality_train[series].copy()
data_val = air_quality_val[series].copy()
data_test = air_quality_test[series].copy()

model = create_and_compile_model(
    series=data_train,
    levels=levels,
    lags=lags,
    steps=steps,
    recurrent_layer="LSTM",
    recurrent_units=4,
    dense_units=16,
    optimizer=Adam(learning_rate=0.01),
    loss=MeanSquaredError()
)
model.summary()

keras version: 3.6.0
Using backend: torch
torch version: 2.2.2+cpu

Model: "functional"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_layer (InputLayer) | (None, 32, 1) | 0 |
| lstm (LSTM) | (None, 4) | 96 |
| dense (Dense) | (None, 16) | 80 |
| dense_1 (Dense) | (None, 1) | 17 |
| reshape (Reshape) | (None, 1, 1) | 0 |

Total params: 193 (772.00 B)
Trainable params: 193 (772.00 B)
Non-trainable params: 0 (0.00 B)
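The parameter counts in the summary can be checked by hand. An LSTM layer has four gates, each with an input kernel, a recurrent kernel and a bias; a Dense layer has one weight per input-output pair plus one bias per unit. A short sketch of that arithmetic (lstm_params and dense_params are hypothetical helper names):

```python
def lstm_params(units, input_dim):
    # 4 gates x (input kernel + recurrent kernel + bias)
    return 4 * (units * (input_dim + units) + units)

def dense_params(units, input_dim):
    # weight matrix + one bias per unit
    return units * input_dim + units

layers = {
    "lstm": lstm_params(units=4, input_dim=1),       # 96
    "dense": dense_params(units=16, input_dim=4),    # 80
    "dense_1": dense_params(units=1, input_dim=16),  # 17
}
total = sum(layers.values())
print(total, total * 4)  # 193 772  (float32 = 4 bytes per parameter)
```

The same arithmetic gives 10,400 + 1,632 + 165 = 12,197 for the 50-unit model used in the multi-step example.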

# Forecaster Definition
# ==============================================================================
forecaster = ForecasterRnn(
    regressor=model,
    levels=levels,
    transformer_series=MinMaxScaler(),
    fit_kwargs={
        "epochs": 3,  # Number of epochs to train the model.
        "batch_size": 32,  # Batch size to train the model.
        "callbacks": [
            EarlyStopping(monitor="val_loss", patience=5)
        ],  # Callback to stop training when it is no longer learning.
        "series_val": data_val,  # Validation data for model training.
    },
)
forecaster

c:\Users\jaesc2\Miniconda3\envs\skforecast_py11_2\Lib\site-packages\skforecast\deep_learning\_forecaster_rnn.py:229: UserWarning: Setting `lags` = 'auto'. `lags` are inferred from the regressor architecture. Avoid the warning with lags=lags. warnings.warn( c:\Users\jaesc2\Miniconda3\envs\skforecast_py11_2\Lib\site-packages\skforecast\deep_learning\_forecaster_rnn.py:264: UserWarning: `steps` default value = 'auto'. `steps` inferred from regressor architecture. Avoid the warning with steps=steps. warnings.warn(

=============

ForecasterRnn

=============

Regressor: <Functional name=functional, built=True>

Lags: [ 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24

25 26 27 28 29 30 31 32]

Transformer for series: MinMaxScaler()

Window size: 32

Target series, levels: ['o3']

Multivariate series (names): None

Maximum steps predicted: [1]

Training range: None

Training index type: None

Training index frequency: None

Model parameters: {'name': 'functional', 'trainable': True, 'layers': [{'module': 'keras.layers', 'class_name': 'InputLayer', 'config': {'batch_shape': (None, 32, 1), 'dtype': 'float32', 'sparse': False, 'name': 'input_layer'}, 'registered_name': None, 'name': 'input_layer', 'inbound_nodes': []}, {'module': 'keras.layers', 'class_name': 'LSTM', 'config': {'name': 'lstm', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'return_sequences': False, 'return_state': False, 'go_backwards': False, 'stateful': False, 'unroll': False, 'zero_output_for_mask': False, 'units': 4, 'activation': 'relu', 'recurrent_activation': 'sigmoid', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'recurrent_initializer': {'module': 'keras.initializers', 'class_name': 'OrthogonalInitializer', 'config': {'seed': None, 'gain': 1.0}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'unit_forget_bias': True, 'kernel_regularizer': None, 'recurrent_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'recurrent_constraint': None, 'bias_constraint': None, 'dropout': 0.0, 'recurrent_dropout': 0.0, 'seed': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 32, 1)}, 'name': 'lstm', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 32, 1), 'dtype': 'float32', 'keras_history': ['input_layer', 0, 0]}},), 'kwargs': {'training': False, 'mask': None}}]}, {'module': 'keras.layers', 'class_name': 'Dense', 'config': {'name': 'dense', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'units': 16, 'activation': 'relu', 'use_bias': True, 
'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'kernel_regularizer': None, 'bias_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 4)}, 'name': 'dense', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 4), 'dtype': 'float32', 'keras_history': ['lstm', 0, 0]}},), 'kwargs': {}}]}, {'module': 'keras.layers', 'class_name': 'Dense', 'config': {'name': 'dense_1', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'units': 1, 'activation': 'linear', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'kernel_regularizer': None, 'bias_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 16)}, 'name': 'dense_1', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 16), 'dtype': 'float32', 'keras_history': ['dense', 0, 0]}},), 'kwargs': {}}]}, {'module': 'keras.layers', 'class_name': 'Reshape', 'config': {'name': 'reshape', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'target_shape': (1, 1)}, 'registered_name': None, 'build_config': {'input_shape': (None, 1)}, 'name': 'reshape', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 1), 'dtype': 'float32', 'keras_history': ['dense_1', 0, 0]}},), 'kwargs': 
{}}]}], 'input_layers': [['input_layer', 0, 0]], 'output_layers': [['reshape', 0, 0]]}

Compile parameters: {'optimizer': {'module': 'keras.src.backend.torch.optimizers.torch_adam', 'class_name': 'Adam', 'config': {'name': 'adam', 'learning_rate': 0.009999999776482582, 'weight_decay': None, 'clipnorm': None, 'global_clipnorm': None, 'clipvalue': None, 'use_ema': False, 'ema_momentum': 0.99, 'ema_overwrite_frequency': None, 'loss_scale_factor': None, 'gradient_accumulation_steps': None, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False}, 'registered_name': 'Adam'}, 'loss': {'module': 'keras.losses', 'class_name': 'MeanSquaredError', 'config': {'name': 'mean_squared_error', 'reduction': 'sum_over_batch_size'}, 'registered_name': None}, 'loss_weights': None, 'metrics': None, 'weighted_metrics': None, 'run_eagerly': False, 'steps_per_execution': 1, 'jit_compile': False}

fit_kwargs: {'epochs': 3, 'batch_size': 32, 'callbacks': [<keras.src.callbacks.early_stopping.EarlyStopping object at 0x0000022488FB7BD0>]}

Creation date: 2024-11-10 16:43:14

Last fit date: None

Skforecast version: 0.14.0

Python version: 3.11.10

Forecaster id: None

⚠ Warning

The warning in the output indicates that the number of lags has been inferred from the model architecture. In this case, the model's input layer has shape (None, 32, 1), that is, 32 time steps, so the number of lags is 32. Likewise, the number of steps is inferred from the output shape (None, 1, 1). If a different number of lags is desired, the lags argument can be specified in the create_and_compile_model function.

To suppress the warning, pass lags=lags and steps=steps when initializing the ForecasterRnn.

# Fit forecaster
# ==============================================================================
forecaster.fit(data_train)

Epoch 1/3
615/615 ━━━━━━━━━━━━━━━━━━━━ 12s 19ms/step - loss: 0.0195 - val_loss: 0.0062
Epoch 2/3
615/615 ━━━━━━━━━━━━━━━━━━━━ 12s 19ms/step - loss: 0.0055 - val_loss: 0.0064
Epoch 3/3
615/615 ━━━━━━━━━━━━━━━━━━━━ 12s 19ms/step - loss: 0.0057 - val_loss: 0.0056

Overfitting can be tracked by monitoring the loss function on the validation set. Metrics are automatically stored in the history attribute of the ForecasterRnn object, and the plot_history method can be used to visualize the training and validation loss.
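A minimal sketch of reading the stored losses, assuming the history is a Keras-style dictionary with one list per metric ('loss' and 'val_loss'); the values are copied from the training log above. Keras reports the training loss as an average over the batches of each epoch, while val_loss is computed at the end of the epoch, so small negative gaps are normal.

```python
# Keras-style history: one list per metric, one value per epoch
# (values taken from the 3-epoch training log of the 1:1 model)
history = {
    "loss": [0.0195, 0.0055, 0.0057],
    "val_loss": [0.0062, 0.0064, 0.0056],
}

val_loss = history["val_loss"]
best_epoch = val_loss.index(min(val_loss)) + 1  # epochs are 1-based in the log

# Positive gap: validation loss above training loss (possible overfitting)
gaps = [round(v - t, 4) for t, v in zip(history["loss"], val_loss)]

print(best_epoch)  # 3
print(gaps)        # [-0.0133, 0.0009, -0.0001]
```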

# Track training and overfitting
# ==============================================================================
fig, ax = plt.subplots(figsize=(6, 2.5))
forecaster.plot_history(ax=ax)

# Predictions
# ==============================================================================
predictions = forecaster.predict()
predictions

| | o3 |
|---|---|
| 2021-04-01 | 47.126778 |

# Backtesting with test data
# ==============================================================================
cv = TimeSeriesFold(
    steps=forecaster.max_step,
    initial_train_size=len(data.loc[:end_validation, :]),  # Training + Validation Data
    refit=False
)
metrics, predictions = backtesting_forecaster_multiseries(
    forecaster=forecaster,
    series=data,
    cv=cv,
    levels=forecaster.levels,
    metric="mean_absolute_error",
    verbose=False  # Set to True to print detailed information
)

Epoch 1/3
752/752 ━━━━━━━━━━━━━━━━━━━━ 15s 20ms/step - loss: 0.0055 - val_loss: 0.0056
Epoch 2/3
752/752 ━━━━━━━━━━━━━━━━━━━━ 15s 20ms/step - loss: 0.0055 - val_loss: 0.0055
Epoch 3/3
752/752 ━━━━━━━━━━━━━━━━━━━━ 17s 22ms/step - loss: 0.0055 - val_loss: 0.0067


# Backtesting predictions
# ==============================================================================
predictions

| | o3 |
|---|---|
| 2021-10-01 00:00:00 | 50.874161 |
| 2021-10-01 01:00:00 | 55.251842 |
| 2021-10-01 02:00:00 | 59.464100 |
| 2021-10-01 03:00:00 | 59.610218 |
| 2021-10-01 04:00:00 | 50.088608 |
| ... | ... |
| 2021-12-31 19:00:00 | 16.385061 |
| 2021-12-31 20:00:00 | 14.317945 |
| 2021-12-31 21:00:00 | 16.142521 |
| 2021-12-31 22:00:00 | 16.028374 |
| 2021-12-31 23:00:00 | 17.218546 |

2208 rows × 1 columns

# % Error vs series mean
# ==============================================================================
rel_mae = 100 * metrics.loc[0, 'mean_absolute_error'] / np.mean(data["o3"])
print(f"Series mean: {np.mean(data['o3']):0.2f}")
print(f"Relative error (mae): {rel_mae:0.2f} %")

Series mean: 54.52
Relative error (mae): 11.41 %
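The relative error is simply the MAE divided by the mean of the series, expressed as a percentage. A small NumPy sketch with made-up numbers (relative_mae is a hypothetical helper); note that, as in the cell above, the denominator can be the mean of the whole series rather than only the test period.

```python
import numpy as np

def relative_mae(y_true, y_pred, reference):
    """Mean absolute error as a percentage of the mean of `reference`."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    mae = np.mean(np.abs(y_true - y_pred))
    return 100 * mae / np.mean(reference)

y_true = np.array([50.0, 55.0, 60.0, 40.0])  # illustrative values
y_pred = np.array([52.0, 53.0, 63.0, 41.0])
rel = relative_mae(y_true, y_pred, reference=y_true)  # MAE = 2.0, mean = 51.25

# Going the other way with the reported figures: 11.41 % of a 54.52 mean
implied_mae = 11.41 / 100 * 54.52  # about 6.22, in the units of o3 (µg/m³)
print(round(rel, 2), round(implied_mae, 2))  # 3.9 6.22
```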

# Plotting predictions vs real values in the test set
# ==============================================================================
fig, ax = plt.subplots(figsize=(8, 3))
data_test["o3"].plot(ax=ax, label="test")
predictions["o3"].plot(ax=ax, label="predictions")
ax.set_title("O3")
ax.legend();

1:1 Multiple-Step Forecasting - Predict a single series using the same series as predictor. Multiple steps ahead.

The next case is similar to the previous one, but now the goal is to predict multiple future values. In this scenario, multiple future steps of a single time series are modeled using only its past values.

A similar architecture to the previous one is used, but with more neurons in the LSTM layer and in the first dense layer, which gives the model more capacity to capture the dynamics of the series.

# Model creation
# ==============================================================================
series = ["o3"]  # Series used as predictors
levels = ["o3"]  # Target series to predict
lags = 32        # Past time steps to be used to predict the target
steps = 5        # Future time steps to be predicted

model = create_and_compile_model(
    series=data_train,
    levels=levels,
    lags=lags,
    steps=steps,
    recurrent_layer="LSTM",
    recurrent_units=50,
    dense_units=32,
    optimizer=Adam(learning_rate=0.01),
    loss=MeanSquaredError()
)
model.summary()

keras version: 3.6.0
Using backend: torch
torch version: 2.2.2+cpu

Model: "functional_1"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_layer_1 (InputLayer) | (None, 32, 1) | 0 |
| lstm_1 (LSTM) | (None, 50) | 10,400 |
| dense_2 (Dense) | (None, 32) | 1,632 |
| dense_3 (Dense) | (None, 5) | 165 |
| reshape_1 (Reshape) | (None, 5, 1) | 0 |

Total params: 12,197 (47.64 KB)
Trainable params: 12,197 (47.64 KB)
Non-trainable params: 0 (0.00 B)

# Forecaster Creation
# ==============================================================================
forecaster = ForecasterRnn(
    regressor=model,
    levels=levels,
    transformer_series=MinMaxScaler(),
    fit_kwargs={
        "epochs": 3,  # Number of epochs to train the model.
        "batch_size": 32,  # Batch size to train the model.
        "callbacks": [
            EarlyStopping(monitor="val_loss", patience=5)
        ],  # Callback to stop training when it is no longer learning.
        "series_val": data_val,  # Validation data for model training.
    },
)
forecaster

c:\Users\jaesc2\Miniconda3\envs\skforecast_py11_2\Lib\site-packages\skforecast\deep_learning\_forecaster_rnn.py:229: UserWarning: Setting `lags` = 'auto'. `lags` are inferred from the regressor architecture. Avoid the warning with lags=lags. warnings.warn( c:\Users\jaesc2\Miniconda3\envs\skforecast_py11_2\Lib\site-packages\skforecast\deep_learning\_forecaster_rnn.py:264: UserWarning: `steps` default value = 'auto'. `steps` inferred from regressor architecture. Avoid the warning with steps=steps. warnings.warn(

=============

ForecasterRnn

=============

Regressor: <Functional name=functional_1, built=True>

Lags: [ 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24

25 26 27 28 29 30 31 32]

Transformer for series: MinMaxScaler()

Window size: 32

Target series, levels: ['o3']

Multivariate series (names): None

Maximum steps predicted: [1 2 3 4 5]

Training range: None

Training index type: None

Training index frequency: None

Model parameters: {'name': 'functional_1', 'trainable': True, 'layers': [{'module': 'keras.layers', 'class_name': 'InputLayer', 'config': {'batch_shape': (None, 32, 1), 'dtype': 'float32', 'sparse': False, 'name': 'input_layer_1'}, 'registered_name': None, 'name': 'input_layer_1', 'inbound_nodes': []}, {'module': 'keras.layers', 'class_name': 'LSTM', 'config': {'name': 'lstm_1', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'return_sequences': False, 'return_state': False, 'go_backwards': False, 'stateful': False, 'unroll': False, 'zero_output_for_mask': False, 'units': 50, 'activation': 'relu', 'recurrent_activation': 'sigmoid', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'recurrent_initializer': {'module': 'keras.initializers', 'class_name': 'OrthogonalInitializer', 'config': {'seed': None, 'gain': 1.0}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'unit_forget_bias': True, 'kernel_regularizer': None, 'recurrent_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'recurrent_constraint': None, 'bias_constraint': None, 'dropout': 0.0, 'recurrent_dropout': 0.0, 'seed': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 32, 1)}, 'name': 'lstm_1', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 32, 1), 'dtype': 'float32', 'keras_history': ['input_layer_1', 0, 0]}},), 'kwargs': {'training': False, 'mask': None}}]}, {'module': 'keras.layers', 'class_name': 'Dense', 'config': {'name': 'dense_2', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'units': 32, 'activation': 'relu', 
'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'kernel_regularizer': None, 'bias_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 50)}, 'name': 'dense_2', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 50), 'dtype': 'float32', 'keras_history': ['lstm_1', 0, 0]}},), 'kwargs': {}}]}, {'module': 'keras.layers', 'class_name': 'Dense', 'config': {'name': 'dense_3', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'units': 5, 'activation': 'linear', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'kernel_regularizer': None, 'bias_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 32)}, 'name': 'dense_3', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 32), 'dtype': 'float32', 'keras_history': ['dense_2', 0, 0]}},), 'kwargs': {}}]}, {'module': 'keras.layers', 'class_name': 'Reshape', 'config': {'name': 'reshape_1', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'target_shape': (5, 1)}, 'registered_name': None, 'build_config': {'input_shape': (None, 5)}, 'name': 'reshape_1', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 5), 'dtype': 'float32', 'keras_history': 
['dense_3', 0, 0]}},), 'kwargs': {}}]}], 'input_layers': [['input_layer_1', 0, 0]], 'output_layers': [['reshape_1', 0, 0]]}

Compile parameters: {'optimizer': {'module': 'keras.src.backend.torch.optimizers.torch_adam', 'class_name': 'Adam', 'config': {'name': 'adam', 'learning_rate': 0.009999999776482582, 'weight_decay': None, 'clipnorm': None, 'global_clipnorm': None, 'clipvalue': None, 'use_ema': False, 'ema_momentum': 0.99, 'ema_overwrite_frequency': None, 'loss_scale_factor': None, 'gradient_accumulation_steps': None, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False}, 'registered_name': 'Adam'}, 'loss': {'module': 'keras.losses', 'class_name': 'MeanSquaredError', 'config': {'name': 'mean_squared_error', 'reduction': 'sum_over_batch_size'}, 'registered_name': None}, 'loss_weights': None, 'metrics': None, 'weighted_metrics': None, 'run_eagerly': False, 'steps_per_execution': 1, 'jit_compile': False}

fit_kwargs: {'epochs': 3, 'batch_size': 32, 'callbacks': [<keras.src.callbacks.early_stopping.EarlyStopping object at 0x000002248B373690>]}

Creation date: 2024-11-10 16:47:52

Last fit date: None

Skforecast version: 0.14.0

Python version: 3.11.10

Forecaster id: None

✎ Note

The fit_kwargs parameter allows the user to set the training configuration passed to the Keras fit method; the series_val entry is handled by skforecast itself to build the validation data. In the example, 3 training epochs are defined with a batch size of 32. An EarlyStopping callback is configured to stop training when the validation loss has not improved for 5 consecutive epochs (patience=5). With only 3 epochs this callback can never trigger, so the number of epochs must be increased for early stopping to take effect. Other callbacks can also be configured, such as ModelCheckpoint to save the model at each epoch, or TensorBoard to visualize the training and validation loss in real time.
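The effect of patience can be checked with a simplified re-implementation of the rule (a sketch, not Keras' own code; early_stopping_epoch is a hypothetical name). Training stops once the monitored value has failed to improve for patience consecutive epochs, so with 3 epochs and patience=5 the counter can reach at most 2.

```python
def early_stopping_epoch(val_losses, patience, min_delta=0.0):
    """Return the 1-based epoch at which a patience rule stops training,
    or None if it never triggers within the given epochs."""
    best = float("inf")
    wait = 0  # consecutive epochs without improvement
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best, wait = loss, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None

# val_loss values reported when this model is fitted for 3 epochs
print(early_stopping_epoch([0.0160, 0.0124, 0.0115], patience=5))  # None

# A longer run that stalls after epoch 2 stops at epoch 2 + 5 = 7
stalled = [0.50, 0.40, 0.41, 0.42, 0.43, 0.44, 0.45]
print(early_stopping_epoch(stalled, patience=5))  # 7
```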

# Fit forecaster
# ==============================================================================
forecaster.fit(data_train)

Epoch 1/3
615/615 ━━━━━━━━━━━━━━━━━━━━ 19s 30ms/step - loss: 0.0333 - val_loss: 0.0160
Epoch 2/3
615/615 ━━━━━━━━━━━━━━━━━━━━ 18s 29ms/step - loss: 0.0159 - val_loss: 0.0124
Epoch 3/3
615/615 ━━━━━━━━━━━━━━━━━━━━ 18s 30ms/step - loss: 0.0134 - val_loss: 0.0115

# Train and overfitting tracking
# ==============================================================================
fig, ax = plt.subplots(figsize=(6, 2.5))
forecaster.plot_history(ax=ax)

# Prediction
# ==============================================================================
predictions = forecaster.predict()
predictions

| | o3 |
|---|---|
| 2021-04-01 00:00:00 | 45.935574 |
| 2021-04-01 01:00:00 | 38.276730 |
| 2021-04-01 02:00:00 | 35.467434 |
| 2021-04-01 03:00:00 | 34.144344 |
| 2021-04-01 04:00:00 | 34.432968 |

# Specific step predictions
# ==============================================================================
predictions = forecaster.predict(steps=[1, 3])
predictions

| | o3 |
|---|---|
| 2021-04-01 00:00:00 | 45.935574 |
| 2021-04-01 02:00:00 | 35.467434 |
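The network emits all five horizons in a single forward pass (the Dense layer with 5 units followed by a Reshape to (5, 1) in the model summary), so requesting specific steps amounts to indexing that output. A NumPy sketch, assuming 1-based step numbers as the result above suggests (step 3 corresponds to 02:00); the array holds rounded values and is not skforecast's internal representation.

```python
import numpy as np

# Direct multi-step output: (batch, steps, n_levels) = (1, 5, 1)
raw = np.array([[[45.9], [38.3], [35.5], [34.1], [34.4]]])

steps = [1, 3]                             # 1-based horizons
selected = raw[0, np.array(steps) - 1, :]  # rows 0 and 2
print(selected.ravel())  # [45.9 35.5]
```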

# Backtesting
# ==============================================================================
cv = TimeSeriesFold(
    steps=forecaster.max_step,
    initial_train_size=len(data.loc[:end_validation, :]),  # Training + Validation Data
    refit=False
)
metrics, predictions = backtesting_forecaster_multiseries(
    forecaster=forecaster,
    series=data,
    cv=cv,
    levels=forecaster.levels,
    metric="mean_absolute_error",
    verbose=False
)

Epoch 1/3
752/752 ━━━━━━━━━━━━━━━━━━━━ 21s 28ms/step - loss: 0.0121 - val_loss: 0.0115
Epoch 2/3
752/752 ━━━━━━━━━━━━━━━━━━━━ 21s 28ms/step - loss: 0.0117 - val_loss: 0.0138
Epoch 3/3
752/752 ━━━━━━━━━━━━━━━━━━━━ 22s 30ms/step - loss: 0.0118 - val_loss: 0.0137


# Backtesting predictions
# ==============================================================================
predictions

| | o3 |
|---|---|
| 2021-10-01 00:00:00 | 59.022354 |
| 2021-10-01 01:00:00 | 57.059414 |
| 2021-10-01 02:00:00 | 55.824009 |
| 2021-10-01 03:00:00 | 51.360603 |
| 2021-10-01 04:00:00 | 46.362068 |
| ... | ... |
| 2021-12-31 19:00:00 | 24.225929 |
| 2021-12-31 20:00:00 | 21.542786 |
| 2021-12-31 21:00:00 | 17.000990 |
| 2021-12-31 22:00:00 | 18.575756 |
| 2021-12-31 23:00:00 | 21.672462 |

2208 rows × 1 columns

# Plotting predictions vs real values in the test set
# ==============================================================================
fig, ax = plt.subplots(figsize=(8, 3))
data_test["o3"].plot(ax=ax, label="test")
predictions["o3"].plot(ax=ax, label="predictions")
ax.set_title("O3")
ax.legend();

# Backtesting metrics
# ==============================================================================
metrics

| | levels | mean_absolute_error |
|---|---|---|
| 0 | o3 | 10.462818 |

# % Error vs series mean
# ==============================================================================
rel_mae = 100 * metrics.loc[0, 'mean_absolute_error'] / np.mean(data["o3"])
print(f"Series mean: {np.mean(data['o3']):0.2f}")
print(f"Relative error (mae): {rel_mae:0.2f} %")

Series mean: 54.52
Relative error (mae): 19.19 %
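The relative error above is the MAE divided by the mean of the o3 series, expressed as a percentage. Note that the code divides by the mean of the whole series (training, validation and test), not only of the test period. A minimal NumPy sketch of both quantities follows; the function names mae and relative_mae are illustrative.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error between two sequences of equal length."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))

def relative_mae(y_true, y_pred, reference):
    """MAE as a percentage of the mean of a reference series."""
    return 100 * mae(y_true, y_pred) / float(np.mean(reference))

# Toy example: absolute errors are 2, 2 and 3, so the MAE is 7/3
y_true = [10, 20, 30]
y_pred = [12, 18, 33]
print(round(mae(y_true, y_pred), 4))                   # 2.3333
print(round(relative_mae(y_true, y_pred, y_true), 2))  # 11.67

# Figures reported above: MAE 10.462818, series mean 54.52
print(f"{100 * 10.462818 / 54.52:0.2f} %")             # 19.19 %
```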

N:1 Multiple-Step Forecasting - Predict one series using multiple series as predictors.

In this case, a single series is predicted, but multiple time series are used as predictors: the past values of all of them influence the prediction of the target series.
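Conceptually, each training sample is a window with the last lags values of every predictor series, and its target is the next steps values of the target series. This matches the model summary of this section, whose input shape is (None, 32, 10) and output shape is (None, 5, 1). The sketch below builds such windows with NumPy. make_windows is a hypothetical helper written for illustration, not skforecast's internal implementation, and the data are random stand-ins; o3 is at position 6 of the series list used here.

```python
import numpy as np

def make_windows(values, lags, steps, target_cols):
    """Hypothetical helper: build (X, y) samples for a direct multi-step model.

    values: array (n_obs, n_series) with every predictor series as a column.
    target_cols: column positions of the series to predict.
    Returns X with shape (n_windows, lags, n_series) and
    y with shape (n_windows, steps, len(target_cols)).
    """
    values = np.asarray(values, dtype=float)
    n_windows = values.shape[0] - lags - steps + 1
    # Input: the `lags` most recent observations of all series
    X = np.stack([values[i:i + lags] for i in range(n_windows)])
    # Target: the next `steps` observations of the target series only
    y = np.stack([values[i + lags:i + lags + steps, target_cols]
                  for i in range(n_windows)])
    return X, y

series = ['pm2.5', 'co', 'no', 'no2', 'pm10', 'nox', 'o3', 'veloc.', 'direc.', 'so2']
rng = np.random.default_rng(0)
values = rng.normal(size=(100, len(series)))  # random stand-in for the real data
X, y = make_windows(values, lags=32, steps=5, target_cols=[series.index("o3")])
print(X.shape, y.shape)  # (64, 32, 10) (64, 5, 1)
```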

# Model creation
# ==============================================================================
# Time series used in training. Now multiple series are used as predictors
series = ['pm2.5', 'co', 'no', 'no2', 'pm10', 'nox', 'o3', 'veloc.', 'direc.', 'so2']
levels = ["o3"]
lags = 32
steps = 5
data = air_quality[series].copy()
data_train = air_quality_train[series].copy()
data_val = air_quality_val[series].copy()
data_test = air_quality_test[series].copy()
model = create_and_compile_model(
    series=data_train,
    levels=levels,
    lags=lags,
    steps=steps,
    recurrent_layer="LSTM",
    recurrent_units=[100, 50],
    dense_units=[64, 32],
    optimizer=Adam(learning_rate=0.01),
    loss=MeanSquaredError()
)
model.summary()

keras version: 3.6.0
Using backend: torch
torch version: 2.2.2+cpu

Model: "functional_2"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_layer_2 (InputLayer) | (None, 32, 10) | 0 |
| lstm_2 (LSTM) | (None, 32, 100) | 44,400 |
| lstm_3 (LSTM) | (None, 50) | 30,200 |
| dense_4 (Dense) | (None, 64) | 3,264 |
| dense_5 (Dense) | (None, 32) | 2,080 |
| dense_6 (Dense) | (None, 5) | 165 |
| reshape_2 (Reshape) | (None, 5, 1) | 0 |

Total params: 80,109 (312.93 KB)

Trainable params: 80,109 (312.93 KB)

Non-trainable params: 0 (0.00 B)

# Forecaster creation
# ==============================================================================
forecaster = ForecasterRnn(
    regressor=model,
    levels=levels,
    steps=steps,
    lags=lags,
    transformer_series=MinMaxScaler(),
    fit_kwargs={
        "epochs": 4,
        "batch_size": 128,
        "series_val": data_val,
    },
)
forecaster

=============

ForecasterRnn

=============

Regressor: <Functional name=functional_2, built=True>

Lags: [ 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24

25 26 27 28 29 30 31 32]

Transformer for series: MinMaxScaler()

Window size: 32

Target series, levels: ['o3']

Multivariate series (names): None

Maximum steps predicted: [1 2 3 4 5]

Training range: None

Training index type: None

Training index frequency: None

Model parameters: {'name': 'functional_2', 'trainable': True, 'layers': [{'module': 'keras.layers', 'class_name': 'InputLayer', 'config': {'batch_shape': (None, 32, 10), 'dtype': 'float32', 'sparse': False, 'name': 'input_layer_2'}, 'registered_name': None, 'name': 'input_layer_2', 'inbound_nodes': []}, {'module': 'keras.layers', 'class_name': 'LSTM', 'config': {'name': 'lstm_2', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'return_sequences': True, 'return_state': False, 'go_backwards': False, 'stateful': False, 'unroll': False, 'zero_output_for_mask': False, 'units': 100, 'activation': 'relu', 'recurrent_activation': 'sigmoid', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'recurrent_initializer': {'module': 'keras.initializers', 'class_name': 'OrthogonalInitializer', 'config': {'seed': None, 'gain': 1.0}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'unit_forget_bias': True, 'kernel_regularizer': None, 'recurrent_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'recurrent_constraint': None, 'bias_constraint': None, 'dropout': 0.0, 'recurrent_dropout': 0.0, 'seed': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 32, 10)}, 'name': 'lstm_2', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 32, 10), 'dtype': 'float32', 'keras_history': ['input_layer_2', 0, 0]}},), 'kwargs': {'training': False, 'mask': None}}]}, {'module': 'keras.layers', 'class_name': 'LSTM', 'config': {'name': 'lstm_3', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'return_sequences': False, 
'return_state': False, 'go_backwards': False, 'stateful': False, 'unroll': False, 'zero_output_for_mask': False, 'units': 50, 'activation': 'relu', 'recurrent_activation': 'sigmoid', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'recurrent_initializer': {'module': 'keras.initializers', 'class_name': 'OrthogonalInitializer', 'config': {'seed': None, 'gain': 1.0}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'unit_forget_bias': True, 'kernel_regularizer': None, 'recurrent_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'recurrent_constraint': None, 'bias_constraint': None, 'dropout': 0.0, 'recurrent_dropout': 0.0, 'seed': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 32, 100)}, 'name': 'lstm_3', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 32, 100), 'dtype': 'float32', 'keras_history': ['lstm_2', 0, 0]}},), 'kwargs': {'training': False, 'mask': None}}]}, {'module': 'keras.layers', 'class_name': 'Dense', 'config': {'name': 'dense_4', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'units': 64, 'activation': 'relu', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'kernel_regularizer': None, 'bias_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 50)}, 'name': 'dense_4', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': 
(None, 50), 'dtype': 'float32', 'keras_history': ['lstm_3', 0, 0]}},), 'kwargs': {}}]}, {'module': 'keras.layers', 'class_name': 'Dense', 'config': {'name': 'dense_5', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'units': 32, 'activation': 'relu', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'kernel_regularizer': None, 'bias_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 64)}, 'name': 'dense_5', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 64), 'dtype': 'float32', 'keras_history': ['dense_4', 0, 0]}},), 'kwargs': {}}]}, {'module': 'keras.layers', 'class_name': 'Dense', 'config': {'name': 'dense_6', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'units': 5, 'activation': 'linear', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'kernel_regularizer': None, 'bias_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 32)}, 'name': 'dense_6', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 32), 'dtype': 'float32', 'keras_history': ['dense_5', 0, 0]}},), 'kwargs': {}}]}, {'module': 'keras.layers', 'class_name': 'Reshape', 'config': {'name': 'reshape_2', 'trainable': True, 'dtype': {'module': 'keras', 
'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'target_shape': (5, 1)}, 'registered_name': None, 'build_config': {'input_shape': (None, 5)}, 'name': 'reshape_2', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 5), 'dtype': 'float32', 'keras_history': ['dense_6', 0, 0]}},), 'kwargs': {}}]}], 'input_layers': [['input_layer_2', 0, 0]], 'output_layers': [['reshape_2', 0, 0]]}

Compile parameters: {'optimizer': {'module': 'keras.src.backend.torch.optimizers.torch_adam', 'class_name': 'Adam', 'config': {'name': 'adam', 'learning_rate': 0.009999999776482582, 'weight_decay': None, 'clipnorm': None, 'global_clipnorm': None, 'clipvalue': None, 'use_ema': False, 'ema_momentum': 0.99, 'ema_overwrite_frequency': None, 'loss_scale_factor': None, 'gradient_accumulation_steps': None, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False}, 'registered_name': 'Adam'}, 'loss': {'module': 'keras.losses', 'class_name': 'MeanSquaredError', 'config': {'name': 'mean_squared_error', 'reduction': 'sum_over_batch_size'}, 'registered_name': None}, 'loss_weights': None, 'metrics': None, 'weighted_metrics': None, 'run_eagerly': False, 'steps_per_execution': 1, 'jit_compile': False}

fit_kwargs: {'epochs': 4, 'batch_size': 128}

Creation date: 2024-11-10 16:54:30

Last fit date: None

Skforecast version: 0.14.0

Python version: 3.11.10

Forecaster id: None
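The repr shows MinMaxScaler() as the transformer for the series. To our understanding, skforecast fits a separate copy of this transformer for each series on the training data and inverts the transformation of the predictions. This is why predictions are reported in the original units while most training losses in the logs are around 0.01. The NumPy sketch below illustrates per-series min-max scaling; PerSeriesMinMax is a hypothetical class, not scikit-learn's MinMaxScaler. Values outside the training range map outside [0, 1].

```python
import numpy as np

class PerSeriesMinMax:
    """Hypothetical per-column min-max scaler (stand-in for one MinMaxScaler per series)."""

    def fit(self, train):
        train = np.asarray(train, dtype=float)
        self.min_ = train.min(axis=0)
        self.range_ = train.max(axis=0) - self.min_
        # Constant series: use a range of 1 to avoid dividing by zero
        self.range_[self.range_ == 0] = 1.0
        return self

    def transform(self, x):
        return (np.asarray(x, dtype=float) - self.min_) / self.range_

    def inverse_transform(self, x_scaled):
        return np.asarray(x_scaled, dtype=float) * self.range_ + self.min_

# Two toy series (columns) with very different scales
train = np.array([[0.0, 10.0], [5.0, 30.0], [10.0, 50.0]])
scaler = PerSeriesMinMax().fit(train)
scaled = scaler.transform(train)         # each column now spans [0, 1]
restored = scaler.inverse_transform(scaled)
print(scaler.transform([[20.0, 10.0]]))  # [[2. 0.]]: outside the training range
```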

# Fit forecaster
# ==============================================================================
forecaster.fit(data_train)

Epoch 1/4
154/154 ━━━━━━━━━━━━━━━━━━━━ 15s 95ms/step - loss: 67.4348 - val_loss: 0.0299
Epoch 2/4
154/154 ━━━━━━━━━━━━━━━━━━━━ 14s 91ms/step - loss: 0.0201 - val_loss: 0.0184
Epoch 3/4
154/154 ━━━━━━━━━━━━━━━━━━━━ 13s 87ms/step - loss: 0.0142 - val_loss: 0.0147
Epoch 4/4
154/154 ━━━━━━━━━━━━━━━━━━━━ 14s 91ms/step - loss: 0.0119 - val_loss: 0.0134

# Training and overfitting tracking
# ==============================================================================
fig, ax = plt.subplots(figsize=(7, 3))
forecaster.plot_history(ax=ax)

# Prediction
# ==============================================================================
predictions = forecaster.predict()
predictions

| | o3 |
|---|---|
| 2021-04-01 00:00:00 | 49.559605 |
| 2021-04-01 01:00:00 | 45.086384 |
| 2021-04-01 02:00:00 | 40.562786 |
| 2021-04-01 03:00:00 | 37.500679 |
| 2021-04-01 04:00:00 | 34.021168 |

# Backtesting with test data
# ==============================================================================
cv = TimeSeriesFold(
    steps = forecaster.max_step,
    initial_train_size = len(data.loc[:end_validation, :]),  # Training + Validation Data
    refit = False
)
metrics, predictions = backtesting_forecaster_multiseries(
    forecaster=forecaster,
    series=data,
    cv=cv,
    levels=forecaster.levels,
    metric="mean_absolute_error",
    verbose=False
)

Epoch 1/4
188/188 ━━━━━━━━━━━━━━━━━━━━ 17s 92ms/step - loss: 0.0107 - val_loss: 0.0133
Epoch 2/4
188/188 ━━━━━━━━━━━━━━━━━━━━ 21s 111ms/step - loss: 0.0108 - val_loss: 0.0118
Epoch 3/4
188/188 ━━━━━━━━━━━━━━━━━━━━ 25s 130ms/step - loss: 0.0099 - val_loss: 0.0121
Epoch 4/4
188/188 ━━━━━━━━━━━━━━━━━━━━ 20s 109ms/step - loss: 0.0102 - val_loss: 0.0112

0%| | 0/442 [00:00<?, ?it/s]

# Backtesting metrics
# ==============================================================================
metrics

| | levels | mean_absolute_error |
|---|---|---|
| 0 | o3 | 10.145644 |

# % Error vs series mean
# ==============================================================================
rel_mae = 100 * metrics.loc[0, 'mean_absolute_error'] / np.mean(data["o3"])
print(f"Series mean: {np.mean(data['o3']):0.2f}")
print(f"Relative error (mae): {rel_mae:0.2f} %")

Series mean: 54.52
Relative error (mae): 18.61 %

# Backtesting predictions
# ==============================================================================
predictions

| | o3 |
|---|---|
| 2021-10-01 00:00:00 | 52.590939 |
| 2021-10-01 01:00:00 | 47.559982 |
| 2021-10-01 02:00:00 | 41.787987 |
| 2021-10-01 03:00:00 | 35.704887 |
| 2021-10-01 04:00:00 | 30.938972 |
| ... | ... |
| 2021-12-31 19:00:00 | 13.992397 |
| 2021-12-31 20:00:00 | 7.128738 |
| 2021-12-31 21:00:00 | 5.099380 |
| 2021-12-31 22:00:00 | 3.405155 |
| 2021-12-31 23:00:00 | 4.540741 |

2208 rows × 1 columns

# Plotting predictions vs real values in the test set
# ==============================================================================
fig, ax = plt.subplots(figsize=(8, 3))
data_test["o3"].plot(ax=ax, label="test")
predictions["o3"].plot(ax=ax, label="predictions")
ax.set_title("O3")
ax.legend();

N:M Multiple-Step Forecasting - Multiple time series with multiple outputs

This is a more complex scenario in which multiple time series are predicted using multiple time series as predictors, so several series are modeled simultaneously with a single model. This setting arises in many real problems: for example, predicting the stock prices of several companies from their price history together with energy and commodity prices, or forecasting the sales of multiple products in an online store based on the sales of other products, their prices, and so on.

# Model creation
# ==============================================================================
# Now, we have multiple series and multiple targets
series = ['pm2.5', 'co', 'no', 'no2', 'pm10', 'nox', 'o3', 'veloc.', 'direc.', 'so2']
levels = ['pm2.5', 'co', 'no', 'o3']  # Series to predict: all the series or a subset of them
lags = 32
steps = 5
data = air_quality[series].copy()
data_train = air_quality_train[series].copy()
data_val = air_quality_val[series].copy()
data_test = air_quality_test[series].copy()
model = create_and_compile_model(
    series=data_train,
    levels=levels,
    lags=lags,
    steps=steps,
    recurrent_layer="LSTM",
    recurrent_units=[100, 50],
    dense_units=[64, 32],
    optimizer=Adam(learning_rate=0.01),
    loss=MeanSquaredError()
)
model.summary()

keras version: 3.6.0
Using backend: torch
torch version: 2.2.2+cpu

Model: "functional_3"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_layer_3 (InputLayer) | (None, 32, 10) | 0 |
| lstm_4 (LSTM) | (None, 32, 100) | 44,400 |
| lstm_5 (LSTM) | (None, 50) | 30,200 |
| dense_7 (Dense) | (None, 64) | 3,264 |
| dense_8 (Dense) | (None, 32) | 2,080 |
| dense_9 (Dense) | (None, 20) | 660 |
| reshape_3 (Reshape) | (None, 5, 4) | 0 |

Total params: 80,604 (314.86 KB)

Trainable params: 80,604 (314.86 KB)

Non-trainable params: 0 (0.00 B)
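In this summary, the last Dense layer (dense_9) has steps × len(levels) = 5 × 4 = 20 units, and the Reshape layer turns those 20 values into a (5, 4) matrix. Assuming a row-major reshape (the NumPy convention, which Keras's Reshape layer also follows), row h - 1 holds step h and each column holds one target series, in the order given in levels. A small NumPy sketch of that mapping; value_at is a hypothetical helper and the values are placeholders.

```python
import numpy as np

steps = 5
levels = ["pm2.5", "co", "no", "o3"]

# Stand-in for the 20 outputs of the last Dense layer for one sample
flat = np.arange(steps * len(levels), dtype=float)

# Row-major reshape: row = forecast step, column = target series
out = flat.reshape(steps, len(levels))

def value_at(out, step, level):
    """Hypothetical helper: value for a 1-based step and a series name."""
    return out[step - 1, levels.index(level)]

print(out.shape)               # (5, 4)
print(value_at(out, 5, "o3"))  # 19.0
```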

# Forecaster creation
# ==============================================================================
forecaster = ForecasterRnn(
    regressor=model,
    levels=levels,
    steps=steps,
    lags=lags,
    transformer_series=MinMaxScaler(),
    fit_kwargs={
        "epochs": 10,
        "batch_size": 128,
        "callbacks": [
            EarlyStopping(monitor="val_loss", patience=3)
        ],
        "series_val": data_val,
    },
)
forecaster

=============

ForecasterRnn

=============

Regressor: <Functional name=functional_3, built=True>

Lags: [ 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24

25 26 27 28 29 30 31 32]

Transformer for series: MinMaxScaler()

Window size: 32

Target series, levels: ['pm2.5', 'co', 'no', 'o3']

Multivariate series (names): None

Maximum steps predicted: [1 2 3 4 5]

Training range: None

Training index type: None

Training index frequency: None

Model parameters: {'name': 'functional_3', 'trainable': True, 'layers': [{'module': 'keras.layers', 'class_name': 'InputLayer', 'config': {'batch_shape': (None, 32, 10), 'dtype': 'float32', 'sparse': False, 'name': 'input_layer_3'}, 'registered_name': None, 'name': 'input_layer_3', 'inbound_nodes': []}, {'module': 'keras.layers', 'class_name': 'LSTM', 'config': {'name': 'lstm_4', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'return_sequences': True, 'return_state': False, 'go_backwards': False, 'stateful': False, 'unroll': False, 'zero_output_for_mask': False, 'units': 100, 'activation': 'relu', 'recurrent_activation': 'sigmoid', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'recurrent_initializer': {'module': 'keras.initializers', 'class_name': 'OrthogonalInitializer', 'config': {'seed': None, 'gain': 1.0}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'unit_forget_bias': True, 'kernel_regularizer': None, 'recurrent_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'recurrent_constraint': None, 'bias_constraint': None, 'dropout': 0.0, 'recurrent_dropout': 0.0, 'seed': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 32, 10)}, 'name': 'lstm_4', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 32, 10), 'dtype': 'float32', 'keras_history': ['input_layer_3', 0, 0]}},), 'kwargs': {'training': False, 'mask': None}}]}, {'module': 'keras.layers', 'class_name': 'LSTM', 'config': {'name': 'lstm_5', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'return_sequences': False, 
'return_state': False, 'go_backwards': False, 'stateful': False, 'unroll': False, 'zero_output_for_mask': False, 'units': 50, 'activation': 'relu', 'recurrent_activation': 'sigmoid', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'recurrent_initializer': {'module': 'keras.initializers', 'class_name': 'OrthogonalInitializer', 'config': {'seed': None, 'gain': 1.0}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'unit_forget_bias': True, 'kernel_regularizer': None, 'recurrent_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'recurrent_constraint': None, 'bias_constraint': None, 'dropout': 0.0, 'recurrent_dropout': 0.0, 'seed': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 32, 100)}, 'name': 'lstm_5', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 32, 100), 'dtype': 'float32', 'keras_history': ['lstm_4', 0, 0]}},), 'kwargs': {'training': False, 'mask': None}}]}, {'module': 'keras.layers', 'class_name': 'Dense', 'config': {'name': 'dense_7', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'units': 64, 'activation': 'relu', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'kernel_regularizer': None, 'bias_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 50)}, 'name': 'dense_7', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': 
(None, 50), 'dtype': 'float32', 'keras_history': ['lstm_5', 0, 0]}},), 'kwargs': {}}]}, {'module': 'keras.layers', 'class_name': 'Dense', 'config': {'name': 'dense_8', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'units': 32, 'activation': 'relu', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'kernel_regularizer': None, 'bias_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 64)}, 'name': 'dense_8', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 64), 'dtype': 'float32', 'keras_history': ['dense_7', 0, 0]}},), 'kwargs': {}}]}, {'module': 'keras.layers', 'class_name': 'Dense', 'config': {'name': 'dense_9', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'units': 20, 'activation': 'linear', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'kernel_regularizer': None, 'bias_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 32)}, 'name': 'dense_9', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 32), 'dtype': 'float32', 'keras_history': ['dense_8', 0, 0]}},), 'kwargs': {}}]}, {'module': 'keras.layers', 'class_name': 'Reshape', 'config': {'name': 'reshape_3', 'trainable': True, 'dtype': {'module': 'keras', 
'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'target_shape': (5, 4)}, 'registered_name': None, 'build_config': {'input_shape': (None, 20)}, 'name': 'reshape_3', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 20), 'dtype': 'float32', 'keras_history': ['dense_9', 0, 0]}},), 'kwargs': {}}]}], 'input_layers': [['input_layer_3', 0, 0]], 'output_layers': [['reshape_3', 0, 0]]}

Compile parameters: {'optimizer': {'module': 'keras.src.backend.torch.optimizers.torch_adam', 'class_name': 'Adam', 'config': {'name': 'adam', 'learning_rate': 0.009999999776482582, 'weight_decay': None, 'clipnorm': None, 'global_clipnorm': None, 'clipvalue': None, 'use_ema': False, 'ema_momentum': 0.99, 'ema_overwrite_frequency': None, 'loss_scale_factor': None, 'gradient_accumulation_steps': None, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False}, 'registered_name': 'Adam'}, 'loss': {'module': 'keras.losses', 'class_name': 'MeanSquaredError', 'config': {'name': 'mean_squared_error', 'reduction': 'sum_over_batch_size'}, 'registered_name': None}, 'loss_weights': None, 'metrics': None, 'weighted_metrics': None, 'run_eagerly': False, 'steps_per_execution': 1, 'jit_compile': False}

fit_kwargs: {'epochs': 10, 'batch_size': 128, 'callbacks': [<keras.src.callbacks.early_stopping.EarlyStopping object at 0x000002248B460490>]}

Creation date: 2024-11-10 16:57:12

Last fit date: None

Skforecast version: 0.14.0

Python version: 3.11.10

Forecaster id: None

# Fit forecaster
# ==============================================================================
forecaster.fit(data_train)

Epoch 1/10
154/154 ━━━━━━━━━━━━━━━━━━━━ 15s 95ms/step - loss: 0.0127 - val_loss: 0.0103
Epoch 2/10
154/154 ━━━━━━━━━━━━━━━━━━━━ 15s 96ms/step - loss: 0.0049 - val_loss: 0.0094
Epoch 3/10
154/154 ━━━━━━━━━━━━━━━━━━━━ 15s 97ms/step - loss: 0.0043 - val_loss: 0.0096
Epoch 4/10
154/154 ━━━━━━━━━━━━━━━━━━━━ 15s 97ms/step - loss: 0.0040 - val_loss: 0.0085
Epoch 5/10
154/154 ━━━━━━━━━━━━━━━━━━━━ 14s 91ms/step - loss: 0.0038 - val_loss: 0.0075
Epoch 6/10
154/154 ━━━━━━━━━━━━━━━━━━━━ 15s 95ms/step - loss: 0.0036 - val_loss: 0.0075
Epoch 7/10
154/154 ━━━━━━━━━━━━━━━━━━━━ 16s 101ms/step - loss: 0.0035 - val_loss: 0.0071
Epoch 8/10
154/154 ━━━━━━━━━━━━━━━━━━━━ 15s 100ms/step - loss: 0.0034 - val_loss: 0.0069
Epoch 9/10
154/154 ━━━━━━━━━━━━━━━━━━━━ 16s 102ms/step - loss: 0.0033 - val_loss: 0.0078
Epoch 10/10
154/154 ━━━━━━━━━━━━━━━━━━━━ 14s 91ms/step - loss: 0.0033 - val_loss: 0.0075

The prediction can also be made for specific steps, as long as they are within the prediction horizon defined in the model.

# Specific step predictions
# ==============================================================================
forecaster.predict(steps=[1, 5], levels="o3")

| | o3 |
|---|---|
| 2021-04-01 00:00:00 | 35.012405 |
| 2021-04-01 04:00:00 | 22.649246 |

# Prediction
# ==============================================================================
predictions = forecaster.predict()
predictions

| | pm2.5 | co | no | o3 |
|---|---|---|---|---|
| 2021-04-01 00:00:00 | 15.599669 | 0.109202 | 1.619437 | 35.012405 |
| 2021-04-01 01:00:00 | 14.671235 | 0.106962 | 1.450715 | 34.472530 |
| 2021-04-01 02:00:00 | 14.424109 | 0.105125 | 1.828962 | 31.557627 |
| 2021-04-01 03:00:00 | 13.930913 | 0.111892 | 2.649584 | 24.926977 |
| 2021-04-01 04:00:00 | 13.879259 | 0.114469 | 2.742949 | 22.649246 |
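Comparing the two outputs above, predict(steps=[1, 5], levels="o3") returns exactly the first and fifth rows of the o3 column of the full prediction: steps are 1-based positions within the horizon, and with hourly data step h falls h - 1 hours after the first predicted timestamp. The pure-Python sketch below mimics that selection; select_steps is hypothetical and only illustrates the indexing, not skforecast's implementation.

```python
from datetime import datetime, timedelta

def select_steps(first_timestamp, freq, predictions, steps):
    """Hypothetical helper: pick 1-based steps from a full-horizon forecast."""
    horizon = len(predictions)
    for step in steps:
        if not 1 <= step <= horizon:
            raise ValueError(f"step {step} is outside the horizon 1..{horizon}")
    return [(first_timestamp + (step - 1) * freq, predictions[step - 1]) for step in steps]

# o3 column of the full five-step prediction above
o3 = [35.012405, 34.472530, 31.557627, 24.926977, 22.649246]
selected = select_steps(datetime(2021, 4, 1), timedelta(hours=1), o3, steps=[1, 5])
for timestamp, value in selected:
    print(timestamp, value)
# 2021-04-01 00:00:00 35.012405
# 2021-04-01 04:00:00 22.649246
```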

# Backtesting with test data
# ==============================================================================
cv = TimeSeriesFold(
    steps = forecaster.max_step,
    initial_train_size = len(data.loc[:end_validation, :]),  # Training + Validation Data
    refit = False
)
metrics, predictions = backtesting_forecaster_multiseries(
    forecaster=forecaster,
    series=data,
    cv=cv,
    levels=forecaster.levels,
    metric="mean_absolute_error",
    verbose=False
)

Epoch 1/10
188/188 ━━━━━━━━━━━━━━━━━━━━ 17s 92ms/step - loss: 0.0032 - val_loss: 0.0074
Epoch 2/10
188/188 ━━━━━━━━━━━━━━━━━━━━ 19s 99ms/step - loss: 0.0032 - val_loss: 0.0068
Epoch 3/10
188/188 ━━━━━━━━━━━━━━━━━━━━ 20s 105ms/step - loss: 0.0031 - val_loss: 0.0069
Epoch 4/10
188/188 ━━━━━━━━━━━━━━━━━━━━ 20s 107ms/step - loss: 0.0031 - val_loss: 0.0069
Epoch 5/10
188/188 ━━━━━━━━━━━━━━━━━━━━ 19s 101ms/step - loss: 0.0030 - val_loss: 0.0075

0%| | 0/442 [00:00<?, ?it/s]
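In the backtesting log above, training stopped at epoch 5 of 10. This is what EarlyStopping(monitor="val_loss", patience=3) is expected to do: the best val_loss (0.0068) was reached at epoch 2, and epochs 3, 4 and 5 did not improve on it. In the earlier fit log the best value only arrives at epoch 8, so two non-improving epochs follow and all 10 epochs run. The sketch below is a simplified re-implementation of that patience rule for illustration, not Keras' source code; the logged losses are rounded to four decimals, which could matter for ties.

```python
def early_stopping_epochs(val_losses, patience, min_delta=0.0):
    """Simplified patience rule: number of epochs run before training stops.

    An epoch improves when its loss is lower than the best so far by more than
    min_delta; training stops once `patience` consecutive epochs fail to improve.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best, wait = loss, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return len(val_losses)

# val_loss values from the backtesting log (epochs 1 to 5), plus a hypothetical
# sixth value that is never reached because training stops first
backtest_losses = [0.0074, 0.0068, 0.0069, 0.0069, 0.0075, 0.0060]
print(early_stopping_epochs(backtest_losses, patience=3))  # 5

# val_loss values from the fit log: the best value comes at epoch 8, so all 10 run
fit_losses = [0.0103, 0.0094, 0.0096, 0.0085, 0.0075,
              0.0075, 0.0071, 0.0069, 0.0078, 0.0075]
print(early_stopping_epochs(fit_losses, patience=3))  # 10
```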

# Backtesting metrics
# ==============================================================================
metrics

| | levels | mean_absolute_error |
|---|---|---|
| 0 | pm2.5 | 3.770513 |
| 1 | co | 0.026040 |
| 2 | no | 2.661118 |
| 3 | o3 | 11.715626 |
| 4 | average | 4.543324 |
| 5 | weighted_average | 4.543324 |
| 6 | pooling | 4.543324 |
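The three aggregated rows are identical. Assuming the usual definitions (average: unweighted mean of the per-series metrics; weighted_average: mean weighted by the number of predictions of each series; pooling: the metric computed on all predictions pooled together), this is expected here, because every series has the same number of predictions (2208), and the average of the four per-series MAEs is indeed 4.543324. For MAE, weighted_average and pooling always coincide, while average differs when the series have different numbers of predictions. A NumPy sketch with toy data; aggregate_mae is an illustrative function, not skforecast's implementation.

```python
import numpy as np

def aggregate_mae(y_true, y_pred):
    """Per-series MAE plus three ways of aggregating it across series.

    y_true, y_pred: dicts mapping series name -> sequence of values.
    """
    abs_errors = {
        k: np.abs(np.asarray(y_true[k], dtype=float) - np.asarray(y_pred[k], dtype=float))
        for k in y_true
    }
    per_series = {k: float(err.mean()) for k, err in abs_errors.items()}
    counts = {k: err.size for k, err in abs_errors.items()}
    average = float(np.mean(list(per_series.values())))
    weighted_average = sum(per_series[k] * counts[k] for k in per_series) / sum(counts.values())
    pooling = float(np.concatenate(list(abs_errors.values())).mean())
    return per_series, average, weighted_average, pooling

# Same number of predictions per series: the three aggregations coincide
_, avg, wavg, pool = aggregate_mae({"a": [1, 2, 3], "b": [10, 20, 30]},
                                   {"a": [2, 2, 2], "b": [10, 25, 30]})
print(avg, wavg, pool)

# Different numbers of predictions: weighted_average == pooling != average
_, avg2, wavg2, pool2 = aggregate_mae({"a": [1, 2, 3, 4], "b": [10]},
                                      {"a": [1, 2, 3, 5], "b": [14]})
print(avg2, wavg2, pool2)  # 2.125 1.0 1.0
```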

# Plot all the predicted variables as rows in the plot
# ==============================================================================
fig, ax = plt.subplots(len(levels), 1, figsize=(8, 3 * len(levels)), sharex=True)
for i, level in enumerate(levels):
    data_test[level].plot(ax=ax[i], label="test")
    predictions[level].plot(ax=ax[i], label="predictions")
    ax[i].set_title(level)
    ax[i].legend()

Create and compile Keras models

To speed up prototyping, development, and production, skforecast provides the create_and_compile_model function, which infers the architecture from just a few arguments and returns a compiled model. Its main arguments are:

- series: Time series used to train the model.
- levels: Time series to be predicted.
- lags: Number of past time steps used as input to predict the future values.
- steps: Number of future time steps to be predicted.
- recurrent_layer: Type of recurrent layer to use. By default, an LSTM layer is used.
- recurrent_units: Number of units in the recurrent layer. By default, 100. If a list is passed, one recurrent layer is created for each element in the list.
- dense_units: Number of units in the dense layer. By default, 64. If a list is passed, one dense layer is created for each element in the list.
- optimizer: Optimizer to use. By default, Adam with a learning rate of 0.01.
- loss: Loss function to use. By default, Mean Squared Error.
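How the architecture follows from these arguments can be sketched in a few lines. The function below is hypothetical and only reproduces the layer output shapes visible in the model summaries of this document (batch dimension omitted): the input has shape (lags, number of series); every recurrent layer except the last returns the whole sequence, so the next recurrent layer receives one vector per time step; a final Dense layer has steps × number of levels units; and a Reshape layer produces (steps, number of levels).

```python
def infer_layer_shapes(n_series, n_levels, lags, steps,
                       recurrent_units=100, dense_units=64):
    """Hypothetical sketch: output shape of each layer (batch dim omitted)."""
    if isinstance(recurrent_units, int):
        recurrent_units = [recurrent_units]
    if isinstance(dense_units, int):
        dense_units = [dense_units]
    shapes = [("input", (lags, n_series))]
    for i, units in enumerate(recurrent_units):
        is_last = i == len(recurrent_units) - 1
        # Intermediate recurrent layers return the full sequence
        shapes.append(("recurrent", (units,) if is_last else (lags, units)))
    for units in dense_units:
        shapes.append(("dense", (units,)))
    shapes.append(("dense_output", (steps * n_levels,)))
    shapes.append(("reshape", (steps, n_levels)))
    return shapes

# N:1 model: 10 predictor series, 1 target, recurrent [100, 50], dense [64, 32]
for name, shape in infer_layer_shapes(10, 1, 32, 5, [100, 50], [64, 32]):
    print(name, shape)
```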

✎ Note

The following examples use recurrent_layer="LSTM" but it is also possible to use "RNN" layers.

# Create model
# ==============================================================================
series = ["o3"]  # Series used as predictors
levels = ["o3"]  # Target series to predict
lags = 32        # Past time steps to be used to predict the target
steps = 1        # Future time steps to be predicted
data = air_quality[series].copy()
data_train = air_quality_train[series].copy()
data_val = air_quality_val[series].copy()
data_test = air_quality_test[series].copy()
model = create_and_compile_model(
    series=data_train,
    levels=levels,
    lags=lags,
    steps=steps,
    recurrent_layer="LSTM",
    recurrent_units=4,
    dense_units=16,
    optimizer=Adam(learning_rate=0.01),
    loss=MeanSquaredError()
)
model.summary()

keras version: 3.6.0
Using backend: torch
torch version: 2.2.2+cpu

Model: "functional_4"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_layer_4 (InputLayer) | (None, 32, 1) | 0 |
| lstm_6 (LSTM) | (None, 4) | 96 |
| dense_10 (Dense) | (None, 16) | 80 |
| dense_11 (Dense) | (None, 1) | 17 |
| reshape_4 (Reshape) | (None, 1, 1) | 0 |

Total params: 193 (772.00 B)

Trainable params: 193 (772.00 B)

Non-trainable params: 0 (0.00 B)

In this case, a simple LSTM network is used, with a single recurrent layer with 4 neurons and a hidden dense layer with 16 neurons. The following table shows a detailed description of each layer:

| Layer | Type | Output Shape | Parameters | Description |
|---|---|---|---|---|
| Input Layer (InputLayer) | InputLayer | (None, 32, 1) | 0 | Input layer of the model. It receives sequences of length 32, one element per lag, with a single value (the o3 series) at each time step. |
| LSTM Layer (Long Short-Term Memory) | LSTM | (None, 4) | 96 | Recurrent layer with 4 LSTM units. It processes the input sequence and passes its final hidden state (4 values) to the next layer. |
| First Dense Layer (Dense) | Dense | (None, 16) | 80 | Fully connected layer with 16 units. In this architecture it uses a ReLU activation function. |
| Second Dense Layer (Dense) | Dense | (None, 1) | 17 | Fully connected output layer with a single unit (steps × number of levels = 1 × 1). It uses a linear activation, so it can return any real value. |
| Reshape Layer (Reshape) | Reshape | (None, 1, 1) | 0 | Reshapes the output of the previous dense layer to (None, steps, number of levels). It is not strictly necessary here, but it is included so that the same code generalizes to multi-step and multi-series forecasting problems. In this case steps=1 and levels=["o3"], so the output shape is (None, 1, 1). |
| Total | - | - | 193 | Total parameters: 193. Trainable parameters: 193. Non-trainable parameters: 0. |
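The parameter counts in the table follow standard formulas. An LSTM layer has four gates, and each gate has an input kernel, a recurrent kernel and a bias. This gives 4 × (units × (input_dim + units) + units) parameters. A dense layer has input_dim × units weights plus one bias per unit. The plain-Python sketch below checks these formulas against the model summaries in this section; the function names are illustrative only.

```python
def lstm_params(input_dim, units):
    # 4 gates (input, forget, cell candidate, output), each with
    # an input kernel, a recurrent kernel and a bias vector
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim, units):
    # Weight matrix plus one bias per unit
    return input_dim * units + units


# First model: 1 series, LSTM(4) -> Dense(16) -> Dense(1)
first = [lstm_params(1, 4), dense_params(4, 16), dense_params(16, 1)]
print(first, sum(first))  # [96, 80, 17] 193

# Second model: 10 series, LSTM(100) -> LSTM(50) -> Dense(64) -> Dense(32) -> Dense(5 * 4)
second = [lstm_params(10, 100), lstm_params(100, 50),
          dense_params(50, 64), dense_params(64, 32), dense_params(32, 5 * 4)]
print(second, sum(second))  # [44400, 30200, 3264, 2080, 660] 80604
```

The Input and Reshape layers have no parameters. Each float32 parameter takes 4 bytes, which explains the memory figure in the summary: 193 × 4 = 772 bytes.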

More complex models can be built using:

- Multiple series to be modeled (levels)

- Multiple series to be used as predictors (series)

- Multiple steps to be predicted (steps)

- Multiple lags to be used as predictors (lags)

- Multiple recurrent layers (recurrent_units)

- Multiple dense layers (dense_units)

# Model creation
# ==============================================================================
# Now, we have multiple series and multiple targets
series = ['pm2.5', 'co', 'no', 'no2', 'pm10', 'nox', 'o3', 'veloc.', 'direc.', 'so2']
levels = ['pm2.5', 'co', 'no', 'o3']  # Series to predict: all of the series or a subset
lags = 32
steps = 5

data = air_quality[series].copy()
data_train = air_quality_train[series].copy()
data_val = air_quality_val[series].copy()
data_test = air_quality_test[series].copy()

model = create_and_compile_model(
    series=data_train,
    levels=levels,
    lags=lags,
    steps=steps,
    recurrent_layer="LSTM",
    recurrent_units=[100, 50],
    dense_units=[64, 32],
    optimizer=Adam(learning_rate=0.01),
    loss=MeanSquaredError()
)
model.summary()

keras version: 3.6.0
Using backend: torch
torch version: 2.2.2+cpu

Model: "functional_5"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_layer_5 (InputLayer) | (None, 32, 10) | 0 |
| lstm_7 (LSTM) | (None, 32, 100) | 44,400 |
| lstm_8 (LSTM) | (None, 50) | 30,200 |
| dense_12 (Dense) | (None, 64) | 3,264 |
| dense_13 (Dense) | (None, 32) | 2,080 |
| dense_14 (Dense) | (None, 20) | 660 |
| reshape_5 (Reshape) | (None, 5, 4) | 0 |

Total params: 80,604 (314.86 KB)
Trainable params: 80,604 (314.86 KB)
Non-trainable params: 0 (0.00 B)
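In this model, the last dense layer produces 20 = 5 steps × 4 levels values. The Reshape layer then arranges them as (steps, levels). Keras reshapes in row-major order, as NumPy does by default. As a result, flat position k maps to step k // 4 and level k % 4. The NumPy sketch below illustrates this layout. The level names come from the example above; the numeric values are placeholders, not model outputs.

```python
import numpy as np

steps = 5
levels = ["pm2.5", "co", "no", "o3"]

# Placeholder output of the last Dense layer for a batch of 1: 20 values
flat = np.arange(steps * len(levels), dtype="float32").reshape(1, -1)

# Equivalent of the Reshape layer: (batch, steps * levels) -> (batch, steps, levels)
out = flat.reshape(1, steps, len(levels))

# Flat position k lands at step k // n_levels, level k % n_levels
k = 13
step, level = divmod(k, len(levels))
print(out.shape)                          # (1, 5, 4)
print(step + 1, levels[level])            # 4 co
print(out[0, step, level] == flat[0, k])  # True
```

Reading out[0, :, levels.index("o3")] therefore gives the 5 predicted steps for o3.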

Get training and test matrices¶

# Model and forecaster creation
# ==============================================================================
series = ['pm2.5', 'co', 'no', 'no2', 'pm10', 'nox', 'o3', 'veloc.', 'direc.', 'so2']
levels = ['pm2.5', 'co', 'no', 'o3']  # Series to predict: all of the series or a subset
lags = 10
steps = 5

data = air_quality[series].copy()
data_train = air_quality_train[series].copy()
data_val = air_quality_val[series].copy()
data_test = air_quality_test[series].copy()

model = create_and_compile_model(
    series=data_train,
    levels=levels,
    lags=lags,
    steps=steps,
    recurrent_layer="LSTM",
    recurrent_units=[100, 50],
    dense_units=[64, 32],
    optimizer=Adam(learning_rate=0.01),
    loss=MeanSquaredError()
)

forecaster = ForecasterRnn(
    regressor=model,
    levels=levels,
    steps=steps,
    lags=lags,
)
forecaster

keras version: 3.6.0
Using backend: torch
torch version: 2.2.2+cpu

=============
ForecasterRnn
=============
Regressor: <Functional name=functional_6, built=True>
Lags: [ 1 2 3 4 5 6 7 8 9 10]
Transformer for series: MinMaxScaler()
Window size: 10
Target series, levels: ['pm2.5', 'co', 'no', 'o3']
Multivariate series (names): None
Maximum steps predicted: [1 2 3 4 5]
Training range: None
Training index type: None
Training index frequency: None

Model parameters: {'name': 'functional_6', 'trainable': True, 'layers': [{'module': 'keras.layers', 'class_name': 'InputLayer', 'config': {'batch_shape': (None, 10, 10), 'dtype': 'float32', 'sparse': False, 'name': 'input_layer_6'}, 'registered_name': None, 'name': 'input_layer_6', 'inbound_nodes': []}, {'module': 'keras.layers', 'class_name': 'LSTM', 'config': {'name': 'lstm_9', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'return_sequences': True, 'return_state': False, 'go_backwards': False, 'stateful': False, 'unroll': False, 'zero_output_for_mask': False, 'units': 100, 'activation': 'relu', 'recurrent_activation': 'sigmoid', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'recurrent_initializer': {'module': 'keras.initializers', 'class_name': 'OrthogonalInitializer', 'config': {'seed': None, 'gain': 1.0}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'unit_forget_bias': True, 'kernel_regularizer': None, 'recurrent_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'recurrent_constraint': None, 'bias_constraint': None, 'dropout': 0.0, 'recurrent_dropout': 0.0, 'seed': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 10, 10)}, 'name': 'lstm_9', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 10, 10), 'dtype': 'float32', 'keras_history': ['input_layer_6', 0, 0]}},), 'kwargs': {'training': False, 'mask': None}}]}, {'module': 'keras.layers', 'class_name': 'LSTM', 'config': {'name': 'lstm_10', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'return_sequences': False, 
'return_state': False, 'go_backwards': False, 'stateful': False, 'unroll': False, 'zero_output_for_mask': False, 'units': 50, 'activation': 'relu', 'recurrent_activation': 'sigmoid', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'recurrent_initializer': {'module': 'keras.initializers', 'class_name': 'OrthogonalInitializer', 'config': {'seed': None, 'gain': 1.0}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'unit_forget_bias': True, 'kernel_regularizer': None, 'recurrent_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'recurrent_constraint': None, 'bias_constraint': None, 'dropout': 0.0, 'recurrent_dropout': 0.0, 'seed': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 10, 100)}, 'name': 'lstm_10', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 10, 100), 'dtype': 'float32', 'keras_history': ['lstm_9', 0, 0]}},), 'kwargs': {'training': False, 'mask': None}}]}, {'module': 'keras.layers', 'class_name': 'Dense', 'config': {'name': 'dense_15', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'units': 64, 'activation': 'relu', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'kernel_regularizer': None, 'bias_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 50)}, 'name': 'dense_15', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': 
(None, 50), 'dtype': 'float32', 'keras_history': ['lstm_10', 0, 0]}},), 'kwargs': {}}]}, {'module': 'keras.layers', 'class_name': 'Dense', 'config': {'name': 'dense_16', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'units': 32, 'activation': 'relu', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'kernel_regularizer': None, 'bias_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 64)}, 'name': 'dense_16', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 64), 'dtype': 'float32', 'keras_history': ['dense_15', 0, 0]}},), 'kwargs': {}}]}, {'module': 'keras.layers', 'class_name': 'Dense', 'config': {'name': 'dense_17', 'trainable': True, 'dtype': {'module': 'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'units': 20, 'activation': 'linear', 'use_bias': True, 'kernel_initializer': {'module': 'keras.initializers', 'class_name': 'GlorotUniform', 'config': {'seed': None}, 'registered_name': None}, 'bias_initializer': {'module': 'keras.initializers', 'class_name': 'Zeros', 'config': {}, 'registered_name': None}, 'kernel_regularizer': None, 'bias_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}, 'registered_name': None, 'build_config': {'input_shape': (None, 32)}, 'name': 'dense_17', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 32), 'dtype': 'float32', 'keras_history': ['dense_16', 0, 0]}},), 'kwargs': {}}]}, {'module': 'keras.layers', 'class_name': 'Reshape', 'config': {'name': 'reshape_6', 'trainable': True, 'dtype': {'module': 
'keras', 'class_name': 'DTypePolicy', 'config': {'name': 'float32'}, 'registered_name': None}, 'target_shape': (5, 4)}, 'registered_name': None, 'build_config': {'input_shape': (None, 20)}, 'name': 'reshape_6', 'inbound_nodes': [{'args': ({'class_name': '__keras_tensor__', 'config': {'shape': (None, 20), 'dtype': 'float32', 'keras_history': ['dense_17', 0, 0]}},), 'kwargs': {}}]}], 'input_layers': [['input_layer_6', 0, 0]], 'output_layers': [['reshape_6', 0, 0]]}
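The forecaster above uses 10 lags and predicts 5 steps for 4 of the 10 series. The NumPy sketch below shows what training matrices for such a model look like, built with sliding windows. Each sample takes lags consecutive rows of all series as input and the next steps rows of the target series as output. The function make_windows is hypothetical and is not skforecast's implementation. It uses random placeholder data and skips the MinMaxScaler transformation the forecaster applies.

```python
import numpy as np


def make_windows(data, target_cols, lags, steps):
    """Hypothetical helper: build (X, y) sliding windows.

    data: array of shape (n_times, n_series)
    X:    (n_samples, lags, n_series)  -> matches the model input
    y:    (n_samples, steps, n_levels) -> matches the Reshape output
    """
    n_samples = len(data) - lags - steps + 1
    # Input window: rows i .. i + lags - 1 of every series
    X = np.stack([data[i:i + lags] for i in range(n_samples)])
    # Target window: the next `steps` rows of the target series only
    y = np.stack([data[i + lags:i + lags + steps, target_cols]
                  for i in range(n_samples)])
    return X, y


rng = np.random.default_rng(0)
data = rng.random((100, 10))  # 100 time steps, 10 series (placeholder values)
target_cols = [0, 1, 2, 6]    # positions of 'pm2.5', 'co', 'no', 'o3' in series
X, y = make_windows(data, target_cols, lags=10, steps=5)
print(X.shape, y.shape)  # (86, 10, 10) (86, 5, 4)
```

With 100 time steps, 10 lags and 5 steps, there are 100 - 10 - 5 + 1 = 86 complete windows. X matches the (None, 10, 10) input layer, and y matches the (None, 5, 4) output of the Reshape layer.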